What Is BERT?

Since its inception, Google has always tried to make search better for users, both in the quality of the search results and in how those results are displayed on the Search Engine Results Page (SERP).

The quality of search results can only improve if the search engine understands the query correctly. Users also often find it difficult to phrase a query that precisely expresses what they need. They might spell it differently or not know the right words to search for. This makes it harder for the search engine to show relevant results.

“At its core, Search is about understanding language. It’s our job to figure out what you’re searching for and surface helpful information from the web, no matter how you spell or combine the words in your query. While we’ve continued to improve our language understanding capabilities over the years, we sometimes still don’t quite get it right, particularly with complex or conversational queries. In fact, that’s one of the reasons why people often use “keyword-ese,” typing strings of words that they think we’ll understand, but aren’t actually how they’d naturally ask a question.”

In 2018, Google open-sourced a new technique for natural language processing (NLP) pre-training called Bidirectional Encoder Representations from Transformers, or BERT. With this release, anyone in the world can train their own state-of-the-art question answering system (or a variety of other models).

BERT models process each word in relation to all the other words in the query. In other words, they try to understand the meaning and context of the words in a search query as a whole, rather than mapping the query word by word, before displaying the search results.

This requires not only better software but also much more advanced hardware. So, for the first time, Google is using the latest Cloud TPUs to serve search results and get you more relevant information quickly.

By implementing BERT, Google will better understand 1 in 10 searches in English in the US. Google intends to bring this to more languages in the future. The main goal is to understand the role of prepositions like ‘to’ and other such words in the search query, and to establish the correct context so that relevant results are shown.

Before rolling out BERT for search at scale and across many languages, Google is testing it and working to understand the intent behind the queries users submit.

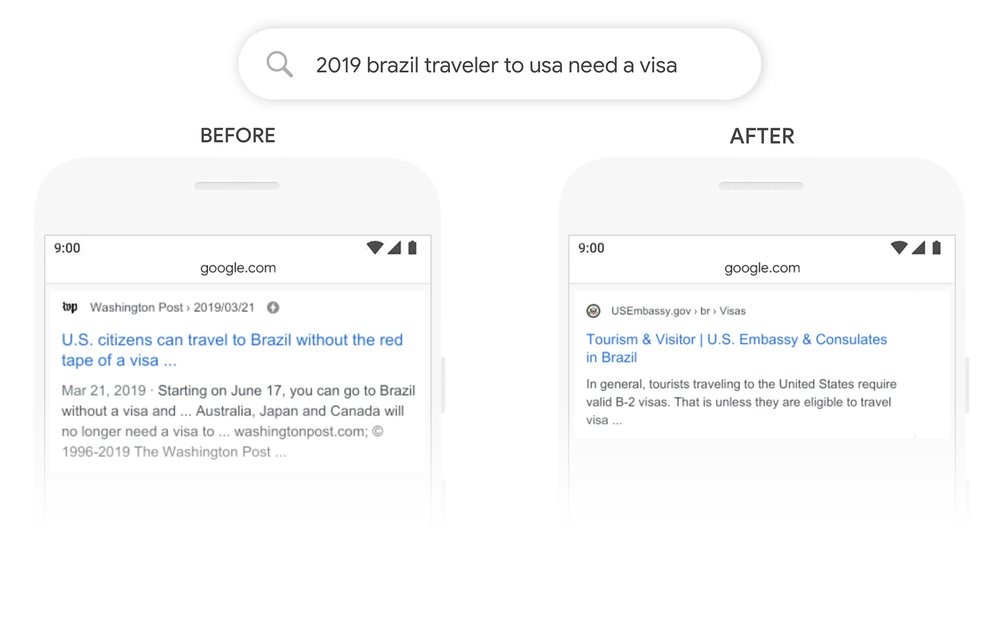

Google has shared some examples:

Here’s a search for “2019 brazil traveler to usa need a visa.” The word “to” and its relationship to the other words in the query are particularly important to understanding the meaning. It’s about a Brazilian traveling to the U.S., and not the other way around. Previously, our algorithms wouldn’t understand the importance of this connection, and we returned results about U.S. citizens traveling to Brazil. With BERT, Search is able to grasp this nuance and know that the very common word “to” actually matters a lot here, and we can provide a much more relevant result for this query.

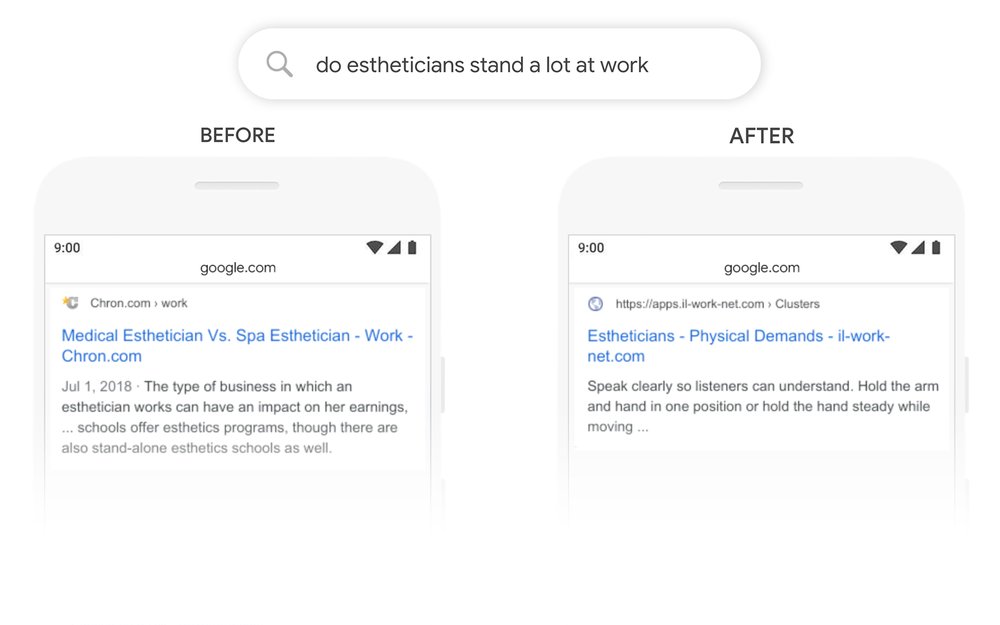
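To see why a keyword-only approach misses this, here is a small Python sketch. It is our own illustration, not how Google Search works. It treats each query as a bag of words, which throws away word order, and compares that with a crude order-aware view built from adjacent word pairs (bigrams). The helper names `tokens`, `bag_of_words` and `bigrams` are hypothetical, and bigrams are only a stand-in: BERT goes much further, reading every word in relation to all the other words in both directions.

```python
import re
from collections import Counter


def tokens(text: str) -> list[str]:
    # Lowercase and split on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def bag_of_words(text: str) -> Counter:
    # Order-free word counts: roughly all a pure keyword matcher sees.
    return Counter(tokens(text))


def bigrams(text: str) -> set[tuple[str, str]]:
    # Adjacent word pairs keep a little word-order information.
    words = tokens(text)
    return set(zip(words, words[1:]))


q1 = "2019 brazil traveler to usa need a visa"
q2 = "2019 usa traveler to brazil need a visa"

print(bag_of_words(q1) == bag_of_words(q2))  # True: same keywords
print(bigrams(q1) == bigrams(q2))            # False: "to usa" vs "to brazil"
```

Both queries contain exactly the same keywords, so a matcher that ignores order cannot tell which way the traveler is going. Even this crude order-aware view separates them.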

Let’s look at another query: “do estheticians stand a lot at work.” Previously, our systems were taking an approach of matching keywords, matching the term “stand-alone” in the result with the word “stand” in the query. But that isn’t the right use of the word “stand” in context. Our BERT models, on the other hand, understand that “stand” is related to the concept of the physical demands of a job, and display a more useful response.

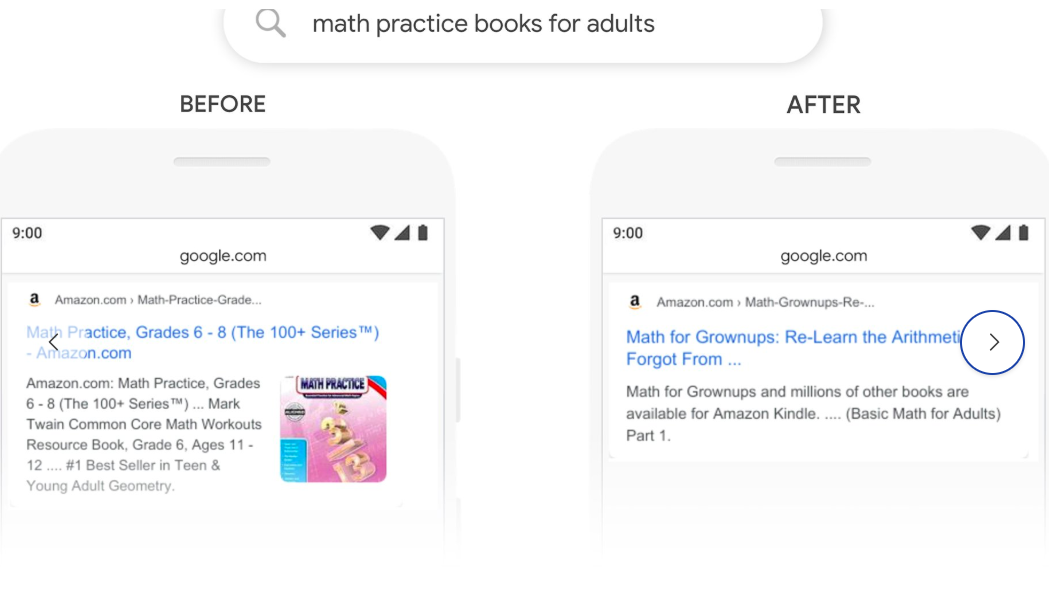
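The “stand-alone” mismatch is easy to reproduce with a naive keyword matcher. In this hypothetical Python sketch, `keyword_hits` splits text on anything that is not a letter or digit, so “stand-alone” becomes the tokens “stand” and “alone” and counts as a hit for “stand”. The snippet text is invented for illustration and is not a real search result.

```python
import re


def tokens(text: str) -> list[str]:
    # Naive tokenizer: lowercase, then split on anything that is not a
    # letter or digit, so "stand-alone" becomes ["stand", "alone"].
    return re.findall(r"[a-z0-9]+", text.lower())


def keyword_hits(query: str, document: str) -> list[str]:
    # Return the query words that also appear in the document, ignoring context.
    doc_words = set(tokens(document))
    return [word for word in tokens(query) if word in doc_words]


query = "do estheticians stand a lot at work"
# Hypothetical result snippet, invented for this illustration.
snippet = "Stand-alone esthetician programs are offered by many schools."

print(keyword_hits(query, snippet))  # ['stand']
```

The matcher reports a hit on “stand” even though the snippet says nothing about standing at work, which is exactly the kind of context error described above.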

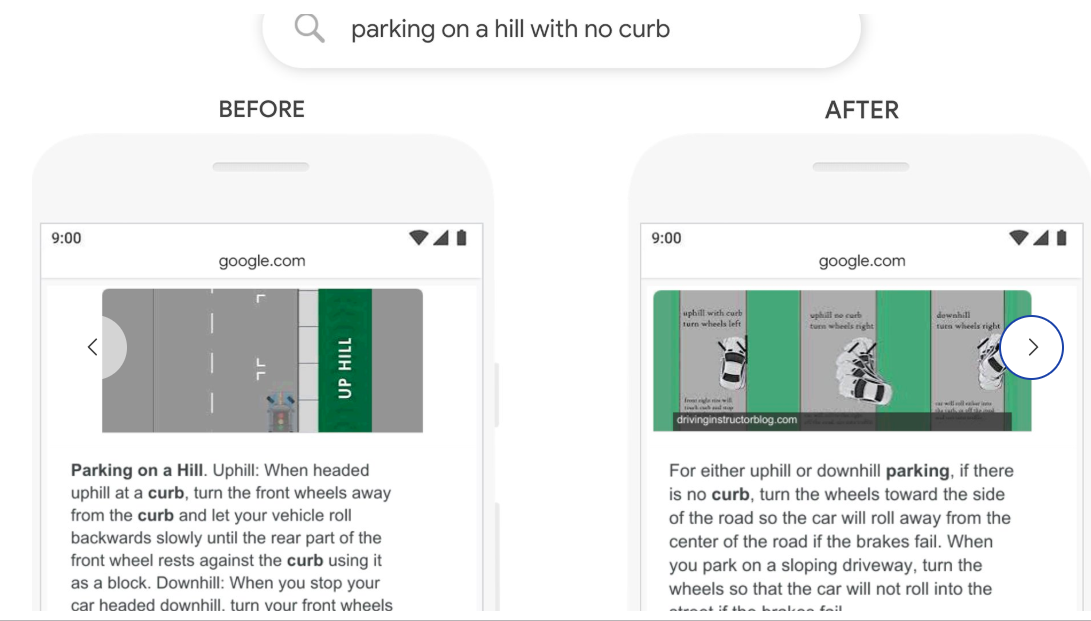

Here are some more examples of language nuances that search engines often fail to interpret correctly, leading to less relevant results.

These before-and-after examples clearly show how BERT improves search results. BERT is also being applied to featured snippets.

BERT and SEO: Do We Need to Optimize for BERT?

After reading this, I’m sure SEOs have the most logical question: how do we optimize for BERT?

The plain and simple answer is that we don’t need to optimize differently for BERT. We just need to add more informative, relevant content to get a more targeted and extensive search presence.

Look at what the SEO experts have to say:

There’s nothing to optimize for with BERT, nor anything for anyone to be rethinking. The fundamentals of us seeking to reward great content remain unchanged.

— Danny Sullivan (@dannysullivan) October 28, 2019

In a recent hangout, John Mueller added to what Danny had tweeted about BERT (see the tweet quoted above).

Here was the question posed to John Mueller:

Will you tell me about the Google BERT Update? Which types of work can I do on SEO according to the BERT algorithms?

John Mueller’s explanation of the purpose of the BERT algorithm:

I would primarily recommend taking a look at the blog post that we did around this particular change. In particular, what we’re trying to do with these changes is to better understand text. Which on the one hand means better understanding the questions or the queries that people send us. And on the other hand better understanding the text on a page. The queries are not really something that you can influence that much as an SEO.

The text on the page is something that you can influence. Our recommendation there is essentially to write naturally. So it seems kind of obvious but a lot of these algorithms try to understand natural text and they try to better understand like what topics is this page about. What special attributes do we need to watch out for and that would allow us to better match the query that someone is asking us with your specific page. So, if anything, there’s anything that you can do to kind of optimize for BERT, it’s essentially to make sure that your pages have natural text on them…

“…and that they’re not written in a way that…”

“Kind of like a normal human would be able to understand. So instead of stuffing keywords as much as possible, kind of write naturally.”

As website owners and SEOs, we need to understand that Google constantly keeps working to make search and the search experience better for its users. BERT is one such effort in that direction.

It is not an algorithmic update directly affecting any on-page, off-page, or technical factors. BERT simply aims to understand and interpret search queries more accurately.

According to Google, language understanding remains an ongoing challenge. No matter how hard they work to better understand search queries, they still get surprised from time to time, which pushes them out of their comfort zone again.

Because BERT tries to understand search queries better and thereby give more relevant results, SEO factors are not directly influenced by its implementation. The one thing that needs to be considered is quality content, which should be added to the site regularly to keep it relevant and matching more and more search queries.

January 20, 2020
