Microsoft published a research study that demonstrates how advanced prompting techniques can cause a generalist AI like GPT-4 to perform as well as or better than a specialist AI that is trained for a specific topic. The researchers discovered that they could make GPT-4 outperform Google’s Med-PaLM 2, a model that was explicitly trained in that topic.

Advanced Prompting Techniques

The results of this research confirm insights that advanced users of generative AI have discovered and are using to generate astonishing images or text output.

Advanced prompting is generally called prompt engineering. While some may scoff that prompting can be so profound as to warrant the name engineering, the fact is that advanced prompting techniques are based on sound principles, and the results of this research study underline this fact.

For example, a technique used by the researchers, Chain of Thought (CoT) reasoning, is one that many advanced generative AI users have discovered and used productively.

Chain of Thought prompting is a method outlined by Google around May 2022 that enables AI to divide a task into steps based on reasoning.

I wrote about Google’s research paper on Chain of Thought Reasoning, which allowed an AI to break a task down into steps, giving it the ability to solve word problems (including math) and to achieve commonsense reasoning.

These principles eventually worked their way into how generative AI users elicited high quality output, whether it was creating images or text output.

Peter Hatherley (Facebook profile), founder of Authored Intelligence web app suites, praised the utility of chain of thought prompting:

“Chain of thought prompting takes your seed ideas and turns them into something extraordinary.”

Peter also noted that he incorporates CoT into his custom GPTs in order to supercharge them.

Chain of Thought (CoT) prompting evolved from the discovery that asking a generative AI for something isn’t enough because the output will consistently be less than ideal.

What CoT prompting does is outline the steps the generative AI needs to take in order to get to the desired output.
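As a rough illustration of that idea, the sketch below contrasts a bare request with a prompt that spells out the steps to follow. The function names, the example task, and the step wording are all hypothetical, and no model is called; the code only assembles the prompt text.

```python
def plain_prompt(task: str) -> str:
    """A bare request with no guidance on how to reach the answer."""
    return task


def chain_of_thought_prompt(task: str, steps: list[str]) -> str:
    """Append an explicit, numbered outline of reasoning steps to the task."""
    outline = "\n".join(f"{i}. {step}" for i, step in enumerate(steps, start=1))
    return (
        f"{task}\n\n"
        "Work through the following steps in order, showing your reasoning:\n"
        f"{outline}\n\n"
        "Finish with a line that starts with 'Answer:'."
    )


if __name__ == "__main__":
    # Hypothetical task and steps, chosen only to show the prompt layout.
    task = "A clinic sees 12 patients an hour for 6 hours. How many patients is that?"
    steps = [
        "Identify the quantities given in the problem.",
        "Decide which operation connects them.",
        "Carry out the calculation.",
    ]
    print(chain_of_thought_prompt(task, steps))
```

The same outline can be swapped for a generic cue such as "Let's think step by step" when the steps are not known in advance.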

The breakthrough of the research is that using CoT reasoning plus two other techniques allowed them to achieve levels of quality beyond what was known to be possible.

This technique is called Medprompt.

Medprompt Proves Value Of Advanced Prompting Techniques

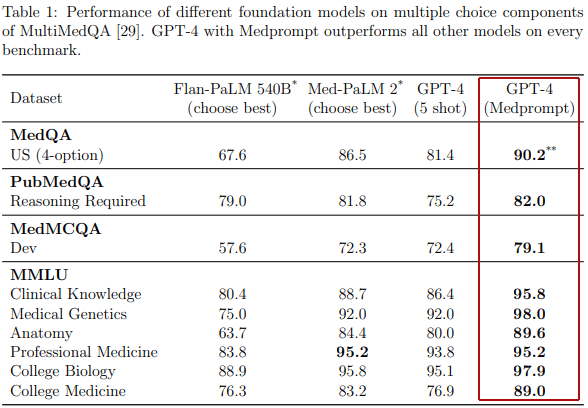

The researchers tested their technique against four different foundation models:

- Flan-PaLM 540B

- Med-PaLM 2

- GPT-4

- GPT-4 MedPrompt

They used benchmark datasets created for testing medical knowledge. Some of these tests were for reasoning, some were questions from medical board exams.

Four Medical Benchmarking Datasets

- MedQA (PDF): Multiple choice question answering dataset

- PubMedQA (PDF): Yes/No/Maybe QA dataset

- MedMCQA (PDF): Multi-Subject Multi-Choice dataset

- MMLU (Massive Multitask Language Understanding) (PDF): This dataset consists of 57 tasks across multiple domains contained within the topics of Humanities, Social Science, and STEM (science, technology, engineering and math). The researchers only used the medical-related tasks such as clinical knowledge, medical genetics, anatomy, professional medicine, college biology and college medicine.

GPT-4 using Medprompt bested all the competitors it was tested against across all four medical-related datasets.

Table Shows How Medprompt Outscored Other Foundation Models

Why Medprompt is Important

The researchers discovered that using CoT reasoning, together with other prompting strategies, could make a general foundation model like GPT-4 outperform specialist models that were trained in just one domain (area of knowledge).

What makes this research especially relevant for everyone who uses generative AI is that the Medprompt technique can be used to elicit high quality output in any knowledge area of expertise, not just the medical domain.

The implication of this breakthrough is that it may not be necessary to expend vast amounts of resources training a specialist large language model to be an expert in a specific area.

One only needs to apply the principles of Medprompt in order to obtain outstanding generative AI output.

Three Prompting Strategies

The researchers described three prompting strategies:

- Dynamic few-shot selection

- Self-generated chain of thought

- Choice shuffle ensembling

Dynamic Few-Shot Selection

Dynamic few-shot selection picks the most relevant examples from a training set and places them in the prompt for each question.

Few-shot learning is a way for the foundation model to learn and adapt to specific tasks with just a few examples.

In this method, models learn from a relatively small set of examples (as opposed to billions of examples), with the focus that the examples are representative of a wide range of questions relevant to the knowledge domain.

Traditionally, experts manually create these examples, but it’s challenging to ensure they cover all possibilities. An alternative, called dynamic few-shot learning, uses examples that are similar to the tasks the model needs to solve, examples that are chosen from a larger training dataset.

In the Medprompt technique, the researchers selected training examples that are semantically similar to a given test case. This dynamic approach is more efficient than traditional methods, as it leverages existing training data without requiring extensive updates to the model.
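The selection step can be sketched as a nearest-neighbor lookup. The example below is a minimal illustration, not the researchers' implementation: the `embed` function is a toy word-count vector standing in for a real embedding model, and the training examples, function names, and `k` value are hypothetical.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: lowercase word counts. A stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def select_few_shot(test_question: str, training_set: list[dict], k: int = 2) -> list[dict]:
    """Return the k training examples most similar to the test question."""
    query = embed(test_question)
    ranked = sorted(
        training_set,
        key=lambda ex: cosine(query, embed(ex["question"])),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(test_question: str, examples: list[dict]) -> str:
    """Place the selected examples ahead of the new question."""
    shots = "\n\n".join(
        f"Question: {ex['question']}\nAnswer: {ex['answer']}" for ex in examples
    )
    return f"{shots}\n\nQuestion: {test_question}\nAnswer:"


if __name__ == "__main__":
    # Hypothetical training examples, for illustration only.
    training_set = [
        {"question": "Which vitamin deficiency causes scurvy?", "answer": "Vitamin C"},
        {"question": "Which nerve is compressed in carpal tunnel syndrome?", "answer": "Median nerve"},
        {"question": "Which vitamin deficiency causes rickets?", "answer": "Vitamin D"},
    ]
    question = "Which vitamin deficiency causes night blindness?"
    print(build_prompt(question, select_few_shot(question, training_set)))
```

Because the examples are chosen per question at prompt time, the model itself is never retrained; only the prompt changes.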

Self-Generated Chain of Thought

The Self-Generated Chain of Thought technique uses natural language statements to guide the AI model with a series of reasoning steps, automating the creation of chain-of-thought examples, which frees it from relying on human experts.

The research paper explains:

“Chain-of-thought (CoT) uses natural language statements, such as “Let’s think step by step,” to explicitly encourage the model to generate a series of intermediate reasoning steps.

The approach has been found to significantly improve the ability of foundation models to perform complex reasoning.

Most approaches to chain-of-thought center on the use of experts to manually compose few-shot examples with chains of thought for prompting. Rather than rely on human experts, we pursued a mechanism to automate the creation of chain-of-thought examples.

We found that we could simply ask GPT-4 to generate chain-of-thought for the training examples using the following prompt:

Self-generated Chain-of-thought Template

    ## Question: {{question}}
    {{answer_choices}}
    ## Answer
    model generated chain of thought explanation
    Therefore, the answer is [final model answer (e.g. A,B,C,D)]”

The researchers realized that this method could yield wrong results (known as hallucinated results). They solved this problem by asking GPT-4 to perform an additional verification step.

This is how the researchers did it:

“A key challenge with this approach is that self-generated CoT rationales have an implicit risk of including hallucinated or incorrect reasoning chains.

We mitigate this concern by having GPT-4 generate both a rationale and an estimation of the most likely answer to follow from that reasoning chain.

If this answer does not match the ground truth label, we discard the sample entirely, under the assumption that we cannot trust the reasoning.

While hallucinated or incorrect reasoning can still yield the correct final answer (i.e. false positives), we found that this simple label-verification step acts as an effective filter for false negatives.”
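The label-verification step described in the quote can be sketched as a simple filter. This is an illustration under stated assumptions, not the paper's code: `generate` is any function that sends a prompt to a model and returns its text, `fake_model` is a hypothetical stand-in that always concludes "B", and the template is adapted from the one quoted above.

```python
import re
from typing import Callable, Optional

# Prompt layout adapted from the quoted template; the model is expected to
# continue after "## Answer" with its reasoning and a final answer line.
TEMPLATE = "## Question: {question}\n{answer_choices}\n## Answer\n"


def extract_answer(completion: str) -> Optional[str]:
    """Pull the final letter out of 'Therefore, the answer is X'."""
    match = re.search(r"Therefore, the answer is \[?([A-D])\]?", completion)
    return match.group(1) if match else None


def build_verified_examples(
    training_set: list[dict], generate: Callable[[str], str]
) -> list[dict]:
    """Keep only self-generated rationales whose final answer matches the label."""
    kept = []
    for example in training_set:
        prompt = TEMPLATE.format(
            question=example["question"],
            answer_choices="\n".join(
                f"{letter}. {text}" for letter, text in example["choices"].items()
            ),
        )
        completion = generate(prompt)
        if extract_answer(completion) == example["label"]:
            kept.append({**example, "rationale": completion})
        # Otherwise the sample is discarded: its reasoning cannot be trusted.
    return kept


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for a model call; always concludes 'B'."""
    return "Scurvy results from a lack of ascorbic acid. Therefore, the answer is B"


if __name__ == "__main__":
    # Hypothetical labeled examples, for illustration only.
    training_set = [
        {"question": "Which vitamin prevents scurvy?",
         "choices": {"A": "Vitamin A", "B": "Vitamin C"}, "label": "B"},
        {"question": "Which vitamin prevents night blindness?",
         "choices": {"A": "Vitamin A", "B": "Vitamin C"}, "label": "A"},
    ]
    verified = build_verified_examples(training_set, fake_model)
    print(f"kept {len(verified)} of {len(training_set)} examples")
```

As the quote notes, this filter cannot catch a flawed rationale that happens to land on the right letter; it only removes rationales that end on the wrong one.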

Choice Shuffling Ensemble

A problem with multiple choice question answering is that foundation models (GPT-4 is a foundation model) can exhibit position bias.

Traditionally, position bias is a tendency that humans have for selecting the top choices in a list of choices.

For example, research has discovered that if users are presented with a list of search results, most people tend to select from the top results, even if the results are wrong. Surprisingly, foundation models exhibit the same behavior.

The researchers created a technique to combat position bias when the foundation model is faced with answering a multiple choice question.

This approach increases the diversity of responses by defeating what’s called “greedy decoding,” which is the behavior of foundation models like GPT-4 of choosing the most likely word or phrase in a series of words or phrases.

In greedy decoding, at each step of generating a sequence of words (or in the context of an image, pixels), the model chooses the likeliest word/phrase/pixel (aka token) based on its current context.

The model makes a choice at each step without consideration of the impact on the overall sequence.
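The difference between greedy decoding and sampling at a temperature above zero can be shown on a single step. The sketch below is a simplified illustration: the token scores are made up, the function names are hypothetical, and a real model would repeat this choice for every token it generates.

```python
import math
import random


def greedy_pick(scores: dict[str, float]) -> str:
    """Greedy decoding: always take the highest-scoring token."""
    return max(scores, key=scores.get)


def sample_pick(scores: dict[str, float], temperature: float, rng: random.Random) -> str:
    """Temperature sampling: draw a token from softmax(scores / temperature)."""
    if temperature <= 0:
        raise ValueError("temperature must be > 0; use greedy_pick instead")
    top = max(scores.values())
    # Subtracting the maximum keeps exp() numerically stable.
    weights = [math.exp((s - top) / temperature) for s in scores.values()]
    return rng.choices(list(scores), weights=weights, k=1)[0]


if __name__ == "__main__":
    # Made-up scores for three candidate next tokens.
    scores = {"A": 2.0, "B": 1.5, "C": 0.2}
    rng = random.Random(0)
    print("greedy:", greedy_pick(scores))
    print("sampled:", [sample_pick(scores, 1.0, rng) for _ in range(10)])
```

Greedy decoding returns the same token every time for the same scores, while sampling can return different tokens across runs, which is what makes multiple diverse reasoning paths possible.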

Choice Shuffling Ensemble solves two problems:

- Position bias

- Greedy decoding

This is how it’s explained:

“To reduce this bias, we propose shuffling the choices and then checking consistency of the answers for the different sort orders of the multiple choice.

As a result, we perform choice shuffle and self-consistency prompting. Self-consistency replaces the naive single-path or greedy decoding with a diverse set of reasoning paths when prompted multiple times at some temperature > 0, a setting that introduces a degree of randomness in generations.

With choice shuffling, we shuffle the relative order of the answer choices before generating each reasoning path. We then select the most consistent answer, i.e., the one that is least sensitive to choice shuffling.

Choice shuffling has an additional benefit of increasing the diversity of each reasoning path beyond temperature sampling, thereby also improving the quality of the final ensemble.

We also apply this technique in generating intermediate CoT steps for training examples. For each example, we shuffle the choices some number of times and generate a CoT for each variant. We only keep the examples with the correct answer.”
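The shuffle-and-vote procedure in the quote can be sketched as follows. This is a minimal illustration, not the paper's implementation: `ask_model` is any function that takes a question and an ordered list of choices and returns the index it picks, `position_biased_model` is a hypothetical stub with a crude position bias, and the sketch covers only the shuffling and majority vote, not temperature sampling.

```python
import random
from collections import Counter
from typing import Callable


def shuffled_variant(choices: list[str], rng: random.Random) -> list[str]:
    """Return a copy of the answer choices in a new random order."""
    variant = choices[:]
    rng.shuffle(variant)
    return variant


def choice_shuffle_ensemble(
    question: str,
    choices: list[str],
    ask_model: Callable[[str, list[str]], int],
    runs: int = 5,
    seed: int = 0,
) -> str:
    """Ask once per shuffled ordering and return the answer text chosen most often."""
    rng = random.Random(seed)
    votes: Counter = Counter()
    for _ in range(runs):
        variant = shuffled_variant(choices, rng)
        picked_index = ask_model(question, variant)
        # Vote on the answer text, not the letter, because letters move with each shuffle.
        votes[variant[picked_index]] += 1
    return votes.most_common(1)[0][0]


CORRECT = "Vitamin C"  # hypothetical ground truth used only by the stub below


def position_biased_model(question: str, variant: list[str]) -> int:
    """Hypothetical stub: answers correctly unless the right choice is listed last,
    in which case it falls back to the first option (a crude position bias)."""
    correct_index = variant.index(CORRECT)
    return 0 if correct_index == len(variant) - 1 else correct_index


if __name__ == "__main__":
    choices = ["Vitamin A", "Vitamin B12", "Vitamin C", "Vitamin D"]
    answer = choice_shuffle_ensemble(
        "Which vitamin deficiency causes scurvy?", choices, position_biased_model, runs=25
    )
    print("ensemble answer:", answer)
```

Errors caused by position land on different answer texts from one shuffle to the next, so they tend to split their votes, while an answer the model picks regardless of position accumulates them.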

So, by shuffling choices and judging the consistency of answers, this method not only reduces bias but also contributes to state-of-the-art performance in benchmark datasets, outperforming sophisticated specially trained models like Med-PaLM 2.

Cross-Domain Success Through Prompt Engineering

Lastly, what makes this research paper stand out is that the wins apply not just to the medical domain; the technique can be used in any kind of knowledge context.

The researchers write:

“We note that, while Medprompt achieves record performance on medical benchmark datasets, the algorithm is general purpose and is not restricted to the medical domain or to multiple choice question answering.

We believe the general paradigm of combining intelligent few-shot exemplar selection, self-generated chain of thought reasoning steps, and majority vote ensembling can be broadly applied to other problem domains, including less constrained problem solving tasks.”

This is an important achievement because it means that the outstanding results can be used on virtually any topic without having to go through the expense and time of intensely training a model on specific knowledge domains.

What Medprompt Means For Generative AI

Medprompt has revealed a new way to elicit enhanced model capabilities, making generative AI more adaptable and versatile across a range of knowledge domains for a lot less training and effort than previously understood.

The implications for the future of generative AI are profound, not to mention how this may influence the skill of prompt engineering.

Read the new research paper:

Can Generalist Foundation Models Outcompete Special-Purpose Tuning? Case Study in Medicine (PDF)

Featured Image by Shutterstock/Asier Romero
